‘Show me an example of the effective publishing of Linked Data.’ That, or a variation of it, must be the request I receive most often when talking to those considering making their own resources available as Linked Data, either within their enterprise or on the wider web.

There are some obvious candidates. The BBC, for instance, makes significant use of Linked Data within its enterprise. They built their fantastic Olympics 2012 online coverage on an infrastructure with Linked Data at its core. Unfortunately, apart from a few exceptions such as Wildlife and Programmes, we only see the results in a powerful web presence. The published data is only visible within their enterprise.

DBpedia is another excellent candidate. From about 2007 it has been a clear demonstration of Tim Berners-Lee’s principles of using URIs as identifiers and providing information, including links to other things, in RDF – it is just there at the end of the DBpedia URIs. But for some reason developers don’t seem to see it as a compelling example. Maybe it is influenced by the Wikipedia effect – interesting but built by open data geeks, so not to be taken seriously.

A third example, which I want to focus on here, is Ordnance Survey. Not generally known much beyond the geographical patch they cover, Ordnance Survey is the official mapping agency for Great Britain. Formally a government agency, they are best known for their incredibly detailed and accurate maps that are the standard accessory for anyone doing anything in the British countryside. A little less known is that they also publish information about postcode areas, parish/town/city/county boundaries, parliamentary constituency areas, and even European regions in Britain. As you can imagine, these areas don’t neatly nest or align with one another, which makes the data about them a great case for a graph-based data model and hence for publishing as Linked Data. Which is what they did a couple of years ago.

The reason I want to focus on their efforts now is that they have recently released, in beta, a new API suite, which I will come to in a moment. But first I must emphasise something that is often missed.

Linked Data is just there – without the need for an API, the raw data (described in RDF) is ‘just there to consume’. Using only standard HTTP web protocols, you can get the data for an entity in their dataset by simply doing an HTTP GET request on its identifier (e.g. for my local village: http://data.ordnancesurvey.co.uk/id/7000000000002929).

What you get back is some nicely formatted HTML for your web browser, and with content negotiation you can get the same thing as RDF/XML, JSON or Turtle. As it is Linked Data, what you get back also includes links to other data, enabling you to navigate your way around their data from entity to entity.
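
As a rough illustration of that content negotiation, here is a minimal Python sketch using only the standard library. The entity URI is the one quoted above; the media types in `MEDIA_TYPES` are my assumptions about what the server will negotiate, and `build_request` and `fetch` are just illustrative helper names, not anything Ordnance Survey provides.

```python
import urllib.request

# URI quoted in the text above.
ENTITY_URI = "http://data.ordnancesurvey.co.uk/id/7000000000002929"

# Assumed media types for each representation; the server may
# support a different set or use different names.
MEDIA_TYPES = {
    "html": "text/html",
    "rdfxml": "application/rdf+xml",
    "turtle": "text/turtle",
    "json": "application/json",
}


def build_request(uri: str, fmt: str = "turtle") -> urllib.request.Request:
    """Build a plain GET request whose Accept header names the wanted format."""
    return urllib.request.Request(
        uri, headers={"Accept": MEDIA_TYPES[fmt]}, method="GET"
    )


def fetch(uri: str, fmt: str = "turtle", timeout: float = 10.0) -> str:
    """Dereference the URI and return the response body as text (needs network)."""
    with urllib.request.urlopen(build_request(uri, fmt), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)
```

Calling `fetch(ENTITY_URI, "turtle")` should, if the service behaves as described, return the same description you see in the browser, only as Turtle. Following any of the URIs it contains with another `fetch` is all that ‘navigating from entity to entity’ amounts to.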

An excellent demonstration of the basic power and benefit of Linked Data. So why is this often missed? Maybe it is because there is nothing to learn, no API documentation required, you can see and use it by just entering a URI into your web browser – too simple to be interesting perhaps.

To get at the data in more interesting and complex ways you need the API set, thoughtfully provided by those who understand the data and some of its most common uses: Ordnance Survey themselves.

The API set, now in beta, is in my opinion a most excellent example of how to build, document, and provide access to Linked Data assets in this way.

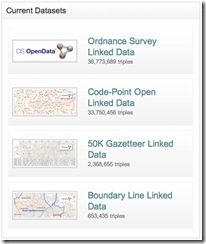

Firstly, the same APIs are applied as standard across four available datasets – three individual, and one combining all three. Nice that you can work with an individually focussed set or get data from all in a consolidated graph.

There are four APIs:

- Lookup – a simple way to extract an RDF description of a single resource, using its URI.

- Search – for running keyword searches over a dataset.

- SPARQL – a fully-compliant SPARQL 1.1 endpoint.

- Reconciliation – a simple web service that supports linking of datasets to the Ordnance Survey Linked Data.
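
To give a feel for what using the SPARQL API might involve, here is a small Python sketch that builds a SPARQL 1.1 Protocol query request. The endpoint address is a placeholder I made up, as I am not quoting the real one here; the `query` parameter and the `application/sparql-results+json` media type come from the SPARQL 1.1 standards rather than from anything specific to this service.

```python
import urllib.request
from urllib.parse import urlencode

# Hypothetical endpoint address, used purely for illustration.
SPARQL_ENDPOINT = "http://example.org/datasets/os-linked-data/apis/sparql"

# List a few properties of the entity mentioned earlier in the text.
QUERY = """
SELECT ?p ?o
WHERE { <http://data.ordnancesurvey.co.uk/id/7000000000002929> ?p ?o }
LIMIT 10
"""


def build_sparql_request(endpoint: str, query: str) -> urllib.request.Request:
    """Build a 'query via GET' request as defined by the SPARQL 1.1 Protocol."""
    params = urlencode({"query": query})
    return urllib.request.Request(
        f"{endpoint}?{params}",
        headers={"Accept": "application/sparql-results+json"},
    )
```

Passing the result to `urllib.request.urlopen` would send the query. The point is simply that a standards-compliant endpoint needs nothing more exotic than an ordinary HTTP client.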

Each API is available to play with on a web page complete with examples and pop-up help hints. It is very easy and quick to get your head around the capabilities of the individual APIs, the use of parameters, and returned formats without having to read documentation or cut a single line of code.

For a quick intro there is even a page with them all on for you to try. When you do get around to cutting code, the documentation for each API is also well presented in a simple and understandable form. They even include details of the available output formats and expected HTTP response codes.

Finally a few general comments.

Firstly, the look, feel, and performance of the site reflect that this is a robust, serious, professional service, and fill you with confidence about building your application on its APIs. Developers of services and APIs, even for internal use, often underestimate the value of presenting and documenting their offering in a professional way. How often have you come across API documentation that makes the first ever web page look modern, and wondered whether it was worth investing the time in even looking at it? A site with a snappy response also ups your confidence that your application will perform well when using the service.

Secondly, the range of APIs, each cleanly satisfying a specific, common need. So for instance you can usefully use Search and Lookup without having any understanding of RDF or SPARQL – the power of SPARQL being there only if you understand and need it.

The usual collection of output formats is available. As a bit of a JavaScript kiddie I would have liked to see JSONP there too, but that is not a show stopper. The provision of the reconciliation API, supporting tools such as OpenRefine, opens up access to a far broader [non-coding] development community.
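
For those who do code, a reconciliation service can also be called directly. The Python sketch below assumes the service follows the usual OpenRefine reconciliation conventions (a `queries` form field holding a JSON object of keyed queries, and a keyed `result` list of scored candidates in the response); I have not checked that against the Ordnance Survey documentation, and the function names are my own.

```python
import json


def build_reconciliation_fields(names, limit=3):
    """Return the form fields for a batch reconciliation request.

    Each name becomes one keyed query (q0, q1, ...), following the
    OpenRefine reconciliation conventions assumed here.
    """
    queries = {
        f"q{i}": {"query": name, "limit": limit} for i, name in enumerate(names)
    }
    return {"queries": json.dumps(queries)}


def best_matches(response):
    """Map each query key to the id of its highest-scoring candidate, if any."""
    best = {}
    for key, payload in response.items():
        candidates = payload.get("result", [])
        if candidates:
            top = max(candidates, key=lambda c: c.get("score", 0))
            best[key] = top["id"]
        else:
            best[key] = None
    return best
```

The fields returned by `build_reconciliation_fields(["Southampton", "Winchester"])` would be URL-encoded and sent to the reconciliation endpoint, and the decoded JSON response handed to `best_matches` to pick one URI per name.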

The additional features – CORS Support and Response Caching – (detailed on the API documentation pages) also demonstrate that this service has been built with the issues of the data consumer in mind. Providing the tools for consumers to take advantage of web caching in their application will greatly enhance response and performance. The CORS Support enables the creation of in-browser applications that draw data from many sites – one of the oft-promoted benefits of Linked Data, but sometimes a little tricky to implement ‘in browser’.
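
To show the kind of saving web caching offers a consumer, here is a small Python sketch of a client that revalidates with conditional GETs. It assumes the service sends `ETag` headers and honours `If-None-Match`, which is standard HTTP behaviour but is my assumption here rather than something taken from the API documentation; `ConditionalCache` is a made-up name.

```python
import urllib.error
import urllib.request


class ConditionalCache:
    """Tiny in-memory cache that revalidates entries using ETags."""

    def __init__(self):
        self._store = {}  # url -> (etag, body)

    def remember(self, url, etag, body):
        """Record the validator and body for a URL."""
        self._store[url] = (etag, body)

    def build_request(self, url):
        """Build a GET request, adding If-None-Match when we hold a copy."""
        req = urllib.request.Request(url)
        if url in self._store:
            req.add_header("If-None-Match", self._store[url][0])
        return req

    def fetch(self, url, timeout=10.0):
        """Return the body, reusing the cached copy on a 304 (needs network)."""
        try:
            with urllib.request.urlopen(self.build_request(url), timeout=timeout) as resp:
                body = resp.read()
                etag = resp.headers.get("ETag")
                if etag:
                    self.remember(url, etag, body)
                return body
        except urllib.error.HTTPError as err:
            # urllib reports 304 Not Modified as an HTTPError.
            if err.code == 304 and url in self._store:
                return self._store[url][1]
            raise
```

When nothing has changed the server only has to answer with a short 304, so repeat lookups of the same entity cost very little.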

I can see this site and its associated APIs greatly enhancing the reputation of Ordnance Survey; underpinning the development of many apps and applications; and becoming an ideal source for many people to go ‘to try out’, when writing their first API consuming application code.

Well done to the team behind its production.